Unconstrained Magnetic Scalar Inversion#

This section demonstrates the processing and inversion of the Forrestania airborne magnetic dataset using purely open-source packages.

For an equivalent and streamlined version, see the parent case study here:

👉 Forrestania Case Study

💡 Note:

The steps taken in this tutorial are strongly based on the official SimPEG Tutorials.

# Import necessary Python libraries for data handling and visualization

import os

import zipfile

from pathlib import Path

from tempfile import mkdtemp

# Import CET colormaps

import colorcet as cc

import discretize

import matplotlib as mpl

import matplotlib.colors as mcolors

import matplotlib.pyplot as plt

import numpy as np

import pandas

import scipy as sp

import simpeg

from geoh5py import Workspace, objects

from matplotlib.colors import TwoSlopeNorm

from PIL import Image

from scipy.interpolate import griddata

from scipy.spatial import cKDTree

# Import SimPEG library

from simpeg import (

dask, # Parallel version of the code

potential_fields,

)

from simpeg.maps import InjectActiveCells

from simpeg.utils import plot2Ddata

# Mira Geoscience specific libraries

from simpeg_drivers import assets_path

Geological Setting#

See the Forrestania - Geology section for more details.

Data Preparation#

In this step, we will import and format the necessary datasets using various open-source geoscience libraries.

Download and Unzip the Dataset#

Before we can work with the data, we need to unzip the dataset package and load the files into usable formats.

The zipped package contains the following files:

Airborne magnetic survey ✈️

60472_AOI4.csvDigital Elevation Model (DEM) 🗺️

Forrestania_SRTM1 Australia_MGA50.tiff

# Create a temporary directory for extracting the zip contents

temp_dir = Path(mkdtemp())

print(f"Temporary extraction folder created at: {temp_dir}")

# Locate the zip file using Mira Geoscience simpeg_drivers' built-in asset_path utility

file = assets_path() / r"Case studies/Forrestania_SRTM1 Australia_MGA50_CSV.zip"

# Print the resolved path to confirm where the file is located on disk

print(f"Dataset path: {file}")

# Extract all contents of the zip file into the temporary directory

with zipfile.ZipFile(file, "r") as zf:

zf.extractall(temp_dir)

print(f"Extracted {len(zf.namelist())} files to {temp_dir}")

# List the extracted files for verification

files = list(temp_dir.iterdir())

print("Extracted files:")

for f in files:

print(f" - {f.name}")

Temporary extraction folder created at: C:\Users\dominiquef\AppData\Local\Temp\tmpdg68vthc

Dataset path: C:\Users\dominiquef\Documents\GIT\mira\simpeg-drivers\simpeg_drivers-assets\Case studies\Forrestania_SRTM1 Australia_MGA50_CSV.zip

Extracted 3 files to C:\Users\dominiquef\AppData\Local\Temp\tmpdg68vthc

Extracted files:

- 60472_AOI4.csv

- Forrestania_Gravity_Station_trim_.csv

- Forrestania_SRTM1 Australia_MGA50.tiff

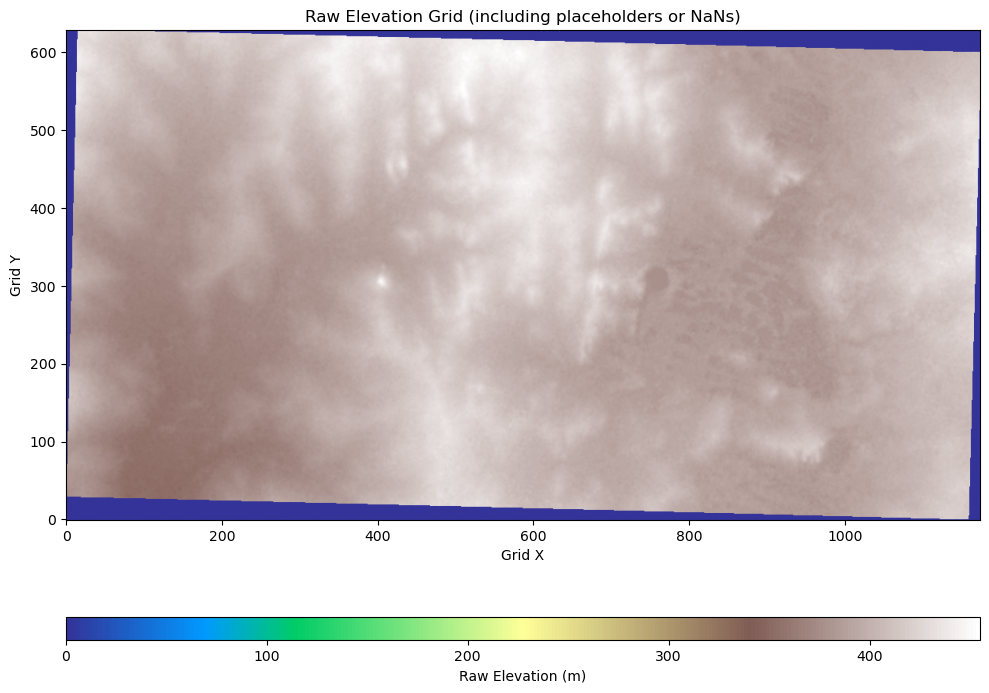

Processing Elevation Data#

In this step, we’ll use Mira Geoscience’s geoh5py library to read and process the elevation data stored in a GeoTIFF file.

Step 1: Convert the GeoTIFF to a 2D Grid#

We’ll leverage geoh5py’s utilities to:

Read the GeoTIFF as a

geo_imageobjectConvert it to a 2D grid of elevation values

Preserve the spatial referencing, including geographic coordinates

This gives us a structured DEM ready for further visualization and interpolation.

# Create a geoh5py workspace (in-memory) for loading the elevation data

ws = Workspace()

# Load the GeoTIFF as a GeoImage object from the extracted files

geotiff = objects.GeoImage.create(

ws, image=str(next(file for file in files if "SRTM1" in file.name))

)

# Register the geospatial reference system using TIFF metadata

geotiff.georeferencing_from_tiff()

# Convert the GeoImage into a structured 2D grid object

grid = geotiff.to_grid2d()

elevations = grid.children[0].values # Flat array of elevation values

# Reshape and visualize the elevation data

elev_image = elevations.reshape(grid.shape, order="F").T

# Plot the elevation data

fig, ax = plt.subplots(figsize=(10, 10))

im = ax.imshow(elev_image, cmap="terrain", origin="lower")

# Add colorbar and annotations

cbar = plt.colorbar(im, orientation="horizontal", pad=0.1, aspect=40)

cbar.set_label("Raw Elevation (m)")

ax.set_title("Raw Elevation Grid (including placeholders or NaNs)")

ax.set_xlabel("Grid X")

ax.set_ylabel("Grid Y")

plt.tight_layout()

plt.show()

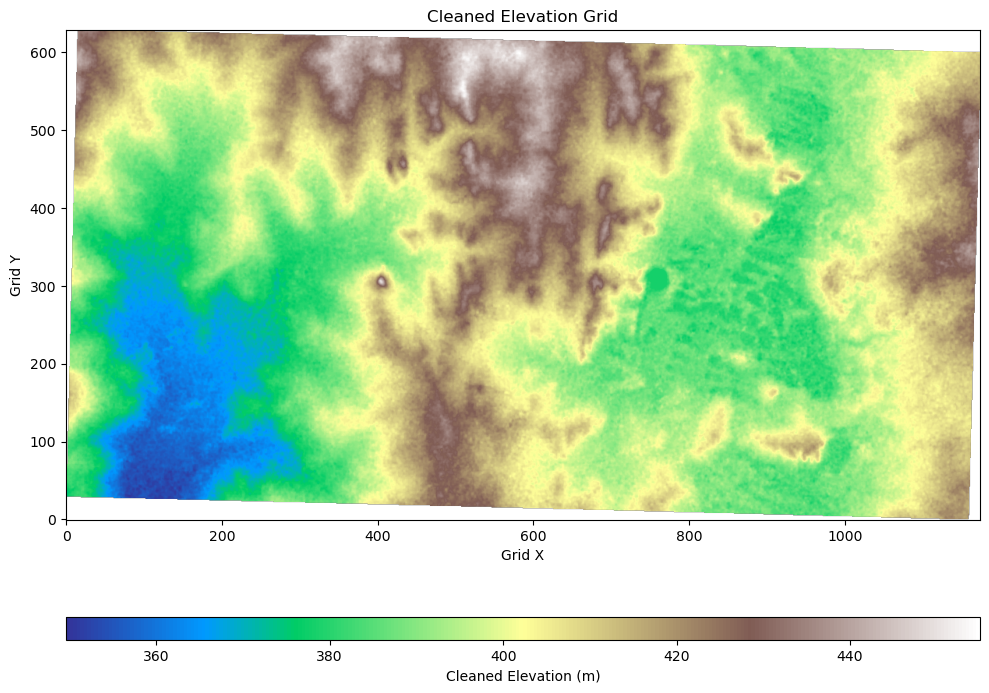

Clean Up Invalid Elevation Values#

We also need to remove the zeros or placeholder values from the rotated DEM.

# Check minimum elevation value

print(f"Minimum elevation value: {np.nanmin(elevations)}")

Minimum elevation value: 1.1754943508222875e-38

# Replace placeholder elevation values (near zero) with NaN

# These extremely small values typically represent "no data" in the raster

no_data_mask = np.abs(elevations) < 2e-38

elevations = np.where(no_data_mask, np.nan, elevations)

# Visualize the cleaned elevation data

fig, ax = plt.subplots(figsize=(10, 10))

cleaned_elev_image = elevations.reshape(grid.shape, order="F").T

im = ax.imshow(cleaned_elev_image, cmap="terrain", origin="lower")

# Add colorbar and axis labels

cbar = plt.colorbar(im, orientation="horizontal", pad=0.1, aspect=40)

cbar.set_label("Cleaned Elevation (m)")

ax.set_title("Cleaned Elevation Grid")

ax.set_xlabel("Grid X")

ax.set_ylabel("Grid Y")

plt.tight_layout()

plt.show()

Final DEM Numpy Array#

With the elevation grid cleaned and spatially referenced, we now combine the X and Y coordinates of the grid centroids with the corresponding elevation values to form a complete DEM numpy array array.

The resulting array has the structure:

# Final DEM array construction: [X, Y, Elevation]

# Combine the grid centroids (X, Y) with the cleaned elevation values

dem = np.c_[grid.centroids[:, :2], elevations]

# Preview the result

print(f"DEM shape: {dem.shape} (rows: points, columns: [X, Y, Elevation])")

DEM shape: (738446, 3) (rows: points, columns: [X, Y, Elevation])

Inspecting the Magnetic Data#

We will use the pandas library to read the magnetic survey data from the provided CSV file.

About the Dataset#

The magnetic data were downloaded from the Geoscience Australia GADDS Portal.

# Load magnetic CSV data file with pandas

mag_dataframe = pandas.read_csv(

next(file for file in files if "AOI4" in file.name)

) # Command 'next' returns the first file that matches the condition.

# Preview the dataset

print("Magnetic survey data preview:")

mag_dataframe

Magnetic survey data preview:

| X | Y | Z | Band 1 | CHORDLEN | COSMIC | DATE | DIURNAL | FIDUCIAL | FLIGHT | ... | THORAW | TIME | TOTRAW | URARAW | X_MGA50 | X_MGA51 | xEAST1 | Y_MGA50 | Y_MGA51 | yNORTH1 | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 749098.69 | 6419105.5 | 447.29 | 385.29 | 5752.7 | 28.0 | 19880216.0 | 58877.6 | 353.0 | 8.0 | ... | 146.0 | 12272.8 | 2213.0 | 81.0 | 749098.7 | 184334.8 | 748955.9 | 6419105.5 | 6417239.5 | 6418957.0 |

| 1 | 749081.69 | 6419105.5 | 448.28 | 386.28 | 5735.7 | 24.7 | 19880216.0 | 58877.6 | 352.0 | 8.0 | ... | 142.3 | 12272.4 | 2128.3 | 77.2 | 749081.7 | 184317.9 | 748938.9 | 6419105.5 | 6417238.5 | 6418957.0 |

| 2 | 749064.56 | 6419105.5 | 449.19 | 387.19 | 5718.6 | 21.5 | 19880216.0 | 58877.6 | 351.0 | 8.0 | ... | 138.5 | 12272.0 | 2043.5 | 73.5 | 749064.6 | 184300.8 | 748921.8 | 6419105.5 | 6417237.5 | 6418957.0 |

| 3 | 749047.44 | 6419105.5 | 449.19 | 387.19 | 5701.5 | 18.3 | 19880216.0 | 58877.6 | 350.0 | 8.0 | ... | 134.8 | 12271.6 | 1958.8 | 69.7 | 749047.4 | 184283.7 | 748904.7 | 6419105.5 | 6417236.5 | 6418957.0 |

| 4 | 749030.38 | 6419105.5 | 449.15 | 387.15 | 5684.4 | 15.0 | 19880216.0 | 58877.6 | 349.0 | 8.0 | ... | 131.0 | 12271.2 | 1874.0 | 66.0 | 749030.4 | 184266.6 | 748887.6 | 6419105.5 | 6417235.5 | 6418957.0 |

| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |

| 4037 | 744737.44 | 6416867.0 | 432.49 | 377.69 | 23716.5 | 22.8 | 19880216.0 | 58896.1 | 2806.0 | 8.0 | ... | 113.5 | 5250.2 | 1896.0 | 74.5 | 744737.4 | 180103.8 | 744594.7 | 6416867.0 | 6414759.0 | 6416718.5 |

| 4038 | 744719.31 | 6416867.0 | 432.17 | 377.67 | 23734.6 | 24.5 | 19880216.0 | 58896.1 | 2807.0 | 8.0 | ... | 119.0 | 5250.6 | 1976.0 | 76.0 | 744719.3 | 180085.8 | 744576.6 | 6416867.0 | 6414758.0 | 6416718.5 |

| 4039 | 744701.13 | 6416867.0 | 431.87 | 377.67 | 23752.8 | 26.2 | 19880216.0 | 58896.1 | 2808.0 | 8.0 | ... | 124.5 | 5251.0 | 2056.0 | 77.5 | 744701.1 | 180067.6 | 744558.4 | 6416867.0 | 6414757.0 | 6416718.5 |

| 4040 | 744683.00 | 6416866.5 | 431.69 | 377.69 | 23770.9 | 28.0 | 19880216.0 | 58896.1 | 2809.0 | 8.0 | ... | 130.0 | 5251.4 | 2136.0 | 79.0 | 744683.0 | 180049.5 | 744540.3 | 6416866.5 | 6414755.0 | 6416718.0 |

| 4041 | 744664.50 | 6416866.5 | 432.73 | 378.63 | 23789.4 | 26.5 | 19880216.0 | 58896.1 | 2810.0 | 8.0 | ... | 131.5 | 5251.8 | 2125.0 | 80.8 | 744664.5 | 180031.0 | 744521.8 | 6416866.5 | 6414754.0 | 6416718.0 |

4042 rows × 31 columns

Data Preprocessing#

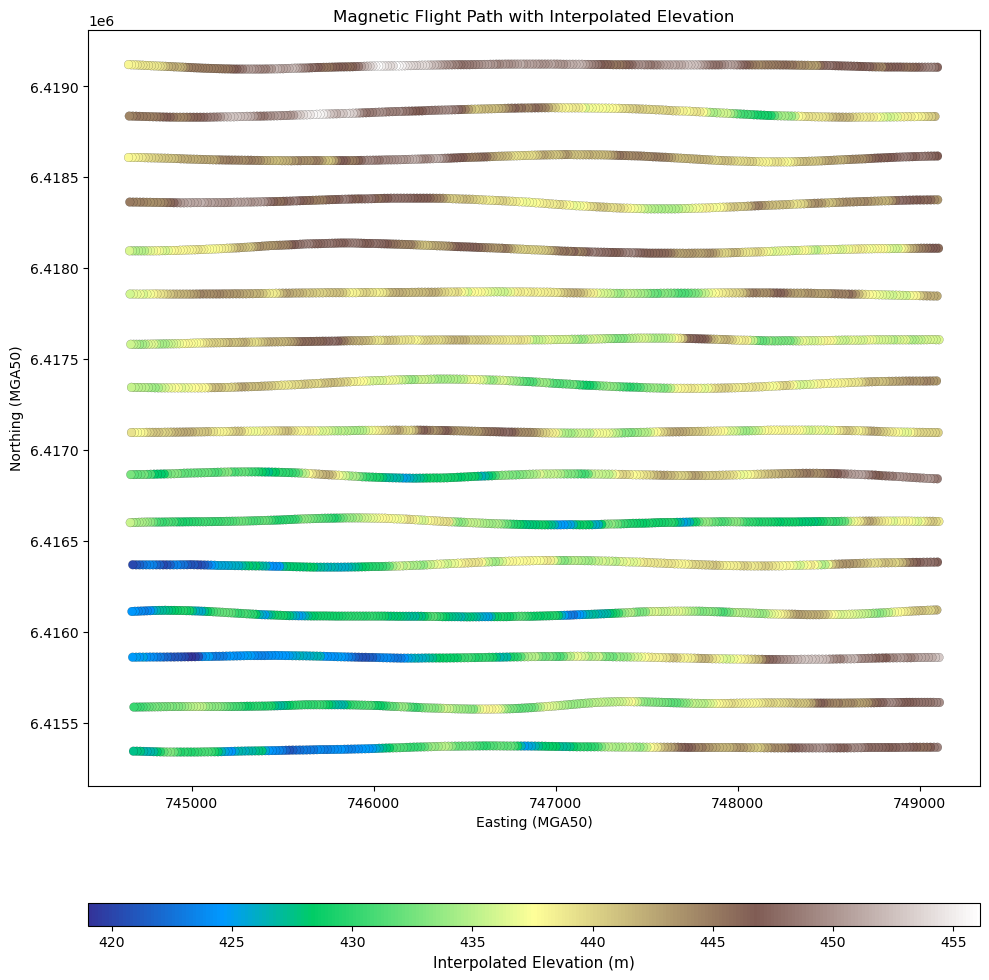

Step 1: Attach elevation to the airborne magnetic data#

The raw CSV contains useful survey information but lacks absolute elevation values. Only the height above ground (terrain clearence) is provided. To add the true elevation to the dataset, we extract terrain elevation from the DEM using nearest-neighbor interpolation, which is fast and sufficient for this purpose.

# Extract relevant columns: projected easting (X), northing (Y), and radar altimeter (height above ground)

mag_locs = mag_dataframe[["X_MGA50", "Y_MGA50", "RADALT"]].to_numpy()

# Build a KDTree for fast spatial search on the DEM 2D coordinates

tree = sp.spatial.cKDTree(dem[:, :2]) # Only XY from DEM

_, ind = tree.query(mag_locs[:, :2]) # Nearest neighbour indices from DEM

# Add DEM ground elevation to RADALT to get true elevation

mag_locs[:, 2] += dem[ind, 2]

# Check elevation range

print(f"Maximum interpolated elevation: {np.nanmax(mag_locs[:, 2])}")

Maximum interpolated elevation: 456.1102294921875

# Plot the survey points coloured by interpolated elevation

fig, ax = plt.subplots(figsize=(10, 12))

sc = ax.scatter(

mag_locs[:, 0],

mag_locs[:, 1], # X and Y coordinates

c=mag_locs[:, 2], # Color is based on elevation

marker="o", # Circle markers

cmap="terrain",

s=40, # Size of points slightly larger for visibility

edgecolors="k",

linewidths=0.1, # Thin black edge for better visibility

)

# Add colorbar

cbar = plt.colorbar(sc, ax=ax, orientation="horizontal", pad=0.1, aspect=40)

cbar.set_label("Interpolated Elevation (m)", fontsize=11)

ax.set_aspect("equal")

ax.set_title("Magnetic Flight Path with Interpolated Elevation")

ax.set_xlabel("Easting (MGA50)")

ax.set_ylabel("Northing (MGA50)")

plt.tight_layout()

plt.show()

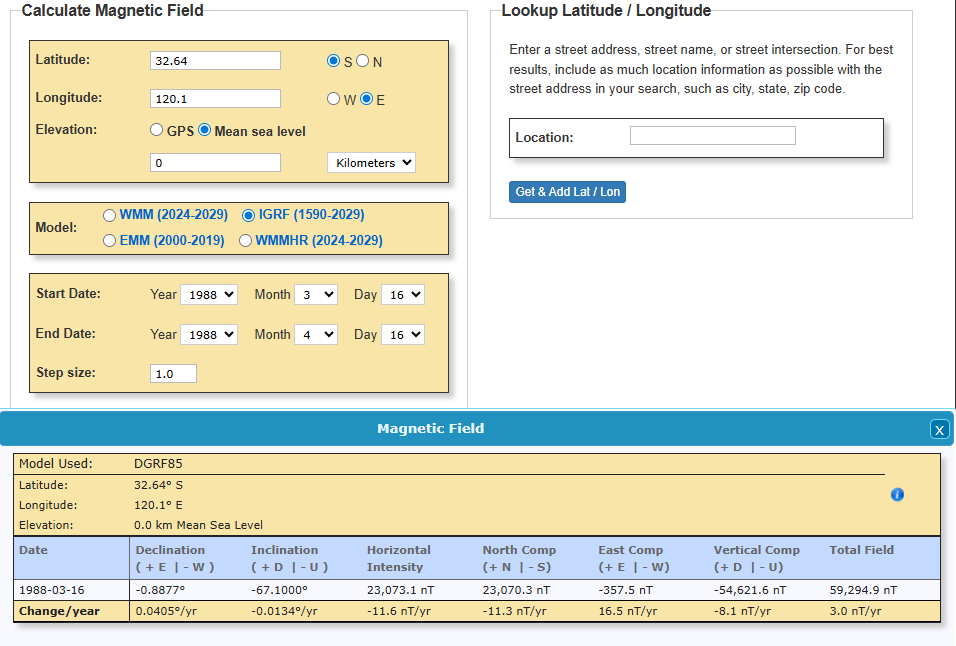

Step 2: Compute Residual Magnetic Data#

Magnetometers measure Total Magnetic Intensity (TMI), which includes both the Earth’s primary magnetic field and secondary fields produced by local magnetized geology (e.g., magnetite-bearing formations).

However, in SimPEG, we must condition the data such that it isolates only the geologic response within the subsurface volume being inverted.

To achieve this, we must remove contributions from the Earth’s primary field, as well as any large-scale regional signals not expected to originate from within the inversion domain.

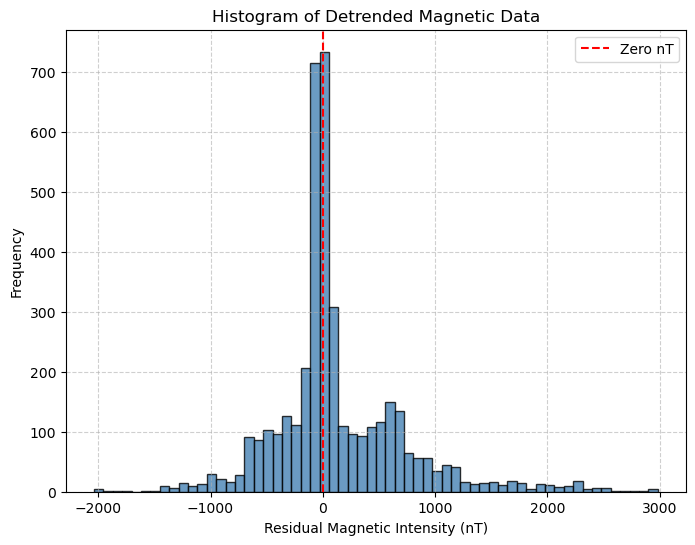

Lookup and Remove IGRF#

The first step is to remove the Earth’s primary field using the International Geomagnetic Reference Field (IGRF).

We obtain the inducing field parameters for the survey location and date from the NOAA Geomagnetic Calculator. For this survey, flown between February and March 1988, the IGRF model provides the following parameters for the survey area:

IGRF parameters at the time of acquisition:

Field strength: 59294 nT

Inclination: −67.1°

Declination: −0.89°

💡 Important:

For a small survey area like this, the IGRF field is nearly uniform. A single IGRF value can be subtracted across the dataset. For larger areas or higher precision, you may compute IGRF at each point.

# Subtract IGRF field strength to get residual magnetic intensity (RMI)

igrf_strength = 59294 # nT

mag_data = mag_dataframe["MAGCOMP"] - igrf_strength

# Plot figure

fig, axes = plt.subplots(1, 2, figsize=(14, 5), constrained_layout=True)

# Plot 1: Histogram of residual magnetic intensity

axes[0].hist(mag_data, bins=60, color="steelblue", edgecolor="k", alpha=0.8)

axes[0].set_title("Histogram of Residual Magnetic Intensity (RMI)")

axes[0].set_xlabel("RMI (nT)")

axes[0].set_ylabel("Frequency")

axes[0].grid(True, linestyle="--", alpha=0.5)

# Show median on histogram

median_val = mag_data.median()

axes[0].axvline(

median_val, color="red", linestyle="--", label=f"Median: {median_val:.1f} nT"

)

axes[0].legend()

# Plot 2: MAGCOMP spatial distribution

sc = axes[1].scatter(

mag_dataframe["X"],

mag_dataframe["Y"],

c=mag_data,

cmap="RdBu_r",

s=20,

marker="o",

edgecolors="black",

linewidth=0.1,

)

axes[1].set_title("Spatial Distribution of RMI Data")

axes[1].set_xlabel("Easting (m)")

axes[1].set_ylabel("Northing (m)")

axes[1].set_aspect("equal")

axes[1].grid(True, linestyle="--", alpha=0.5)

cb = fig.colorbar(sc, ax=axes[1], shrink=0.9, label="RMI (nT)")

plt.suptitle("Residual Magnetic Data Summary", fontsize=14)

plt.show()

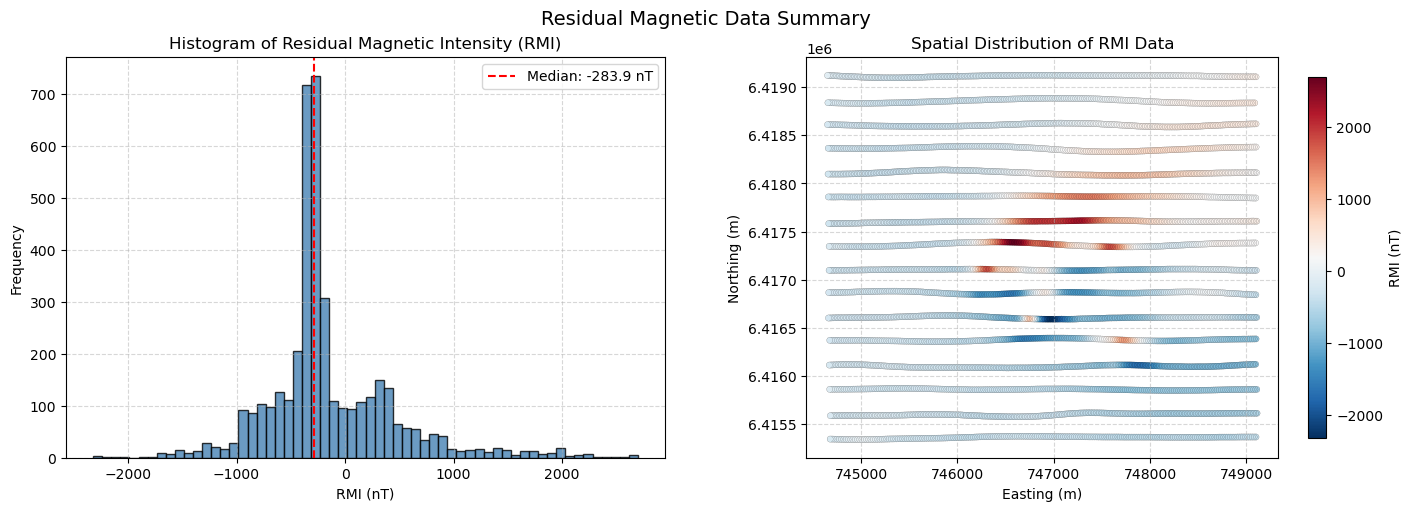

Step 3: Detrend#

The magnetic data still contains a low-frequency regional trend, likely associated with deeper or broad-scale geological sources we are not interested in modelling.

To better isolate the local high-amplitude magnetic anomaly of interest, we subtract the median value from the residual magnetic data.

This:

Suppress background signals from sources that don’t fully reside within the inversion domain

Shifts the baseline so that the background is centered around zero

Enhances contrast of localized features (such as our target magnetic high)

# Detrend by removing the median

mag_data -= mag_data.median()

# Plot histogram of centered magnetic data

fig, ax = plt.subplots(figsize=(8, 6))

ax.hist(mag_data, bins=60, color="steelblue", edgecolor="black", alpha=0.8)

# Show zero line on histogram

ax.axvline(0, color="red", linestyle="--", label="Zero nT")

ax.legend()

# Add plot details

ax.set_title("Histogram of Detrended Magnetic Data")

ax.set_xlabel("Residual Magnetic Intensity (nT)")

ax.set_ylabel("Frequency")

ax.grid(True, linestyle="--", alpha=0.6)

# Confirm new median

print(f"Median after detrending: {mag_data.median():.1f} nT")

Median after detrending: 0.0 nT

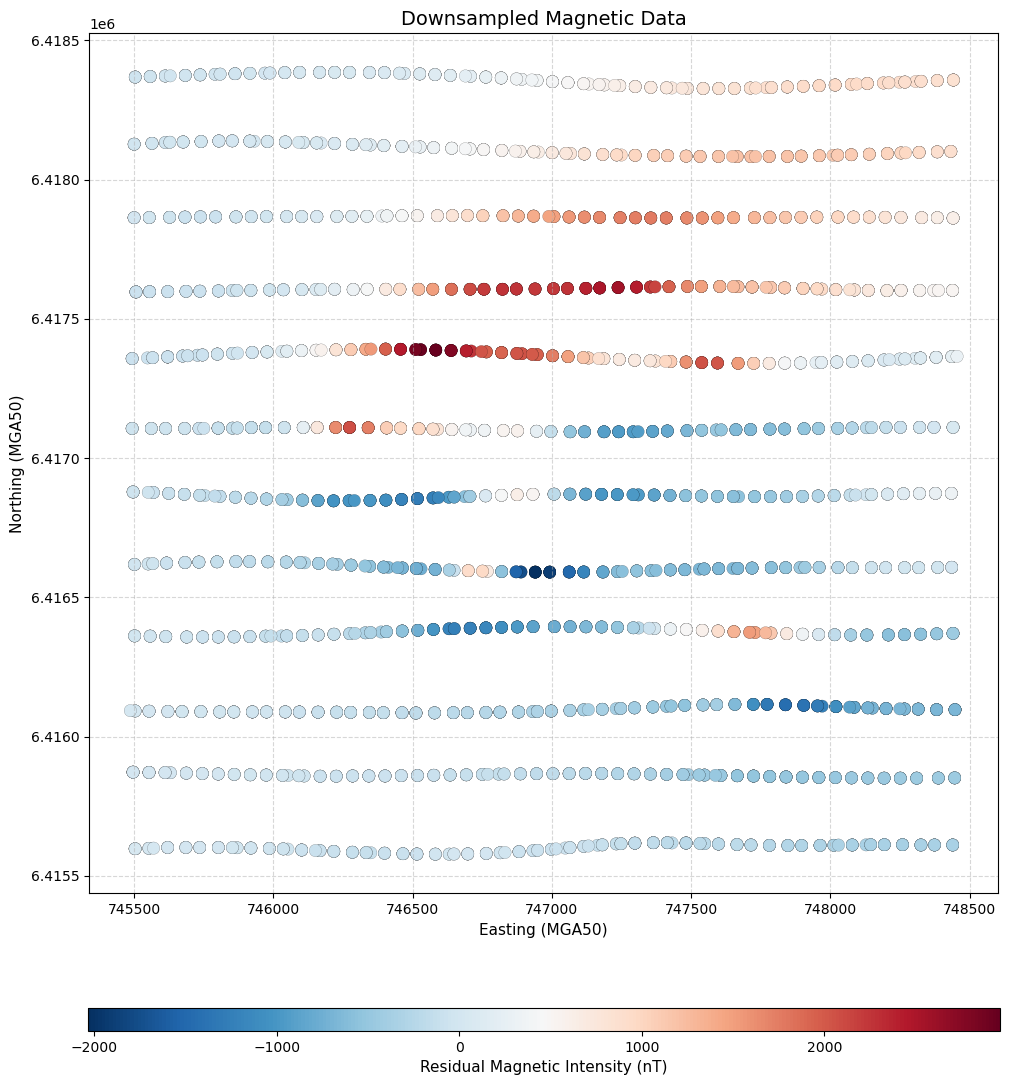

Step 4: Downsampling#

We downsample the magnetic data along flight lines to reduce the computational cost of inversion. Since the average flight height was 60 m, we can confidently downsample to a similar spacing without losing important wavelength information.

This is based on the assumption that the shortest resolvable wavelength in geophysical data is roughly twice the flight height. According to the Nyquist criterion, to preserve these wavelengths, we must sample at least every half-wavelength.

Although we are not directly setting the inversion mesh yet, we use this guideline to inform our target resolution, aiming to preserve all meaningful geological signals from our original dataset during inversion.

Parameter |

Value |

Description |

|---|---|---|

Flight height |

60 m |

Average sensor height above ground |

Shortest resolvable wavelength |

120 m |

~2× flight height |

Nyquist sampling interval |

≤ 60 m |

Required spacing to capture 120 m wavelength |

Target resolution reference |

30 m |

Conservative interval to retain signal content |

Downsampling along flight lines is performed by sampling the data over a regular grid along the flight paths.

# Define grid extent and spacing (60 m)

dx = 60

x_vals = np.arange(745500, 748500, dx) # X coordinates in MGA50, sampled every 60 m

y_vals = np.arange(6415500, 6418500, dx) # Y coordinates in MGA50, sampled every 60 m

x_grid, y_grid = np.meshgrid(x_vals, y_vals)

# Build KDTree for nearest-neighbor lookup

tree = cKDTree(mag_locs[:, :2])

# For each grid node, finds the nearest actual survey point

_, nearest_idx = tree.query(np.c_[x_grid.ravel(), y_grid.ravel()])

# Build new downsampled dataset by selecting the closest survey point to each grid node

mag_survey = np.c_[mag_locs, mag_data][nearest_idx, :]

print(f"Number of Data points in the Original survey: {len(mag_data)} points")

print(f"Number of Data points in the Downsampled survey: {len(mag_survey)} points")

Number of Data points in the Original survey: 4042 points

Number of Data points in the Downsampled survey: 2500 points

# Plot downsampled magnetic survey points colored by residual magnetic data

fig, ax = plt.subplots(figsize=(10, 12))

sc = ax.scatter(

mag_survey[:, 0], # Easting

mag_survey[:, 1], # Northing

c=mag_survey[:, -1], # Residual magnetic intensity (nT)

cmap="RdBu_r",

s=80,

marker="o",

edgecolors="k",

linewidths=0.1, # Thin black edge for better visibility

)

# Add colorbar

cbar = plt.colorbar(sc, ax=ax, orientation="horizontal", pad=0.1, aspect=40)

cbar.set_label("Residual Magnetic Intensity (nT)", fontsize=11)

# Axis settings

ax.set_title("Downsampled Magnetic Data", fontsize=14)

ax.set_xlabel("Easting (MGA50)", fontsize=11)

ax.set_ylabel("Northing (MGA50)", fontsize=11)

ax.set_aspect("equal")

ax.grid(True, linestyle="--", alpha=0.5)

plt.tight_layout()

plt.show()

Inversion#

We proceed with the inversion of the airborne magnetic data. Our goal is to model the shape, estimated susceptibility, and position of magnetized geological units in 3D.

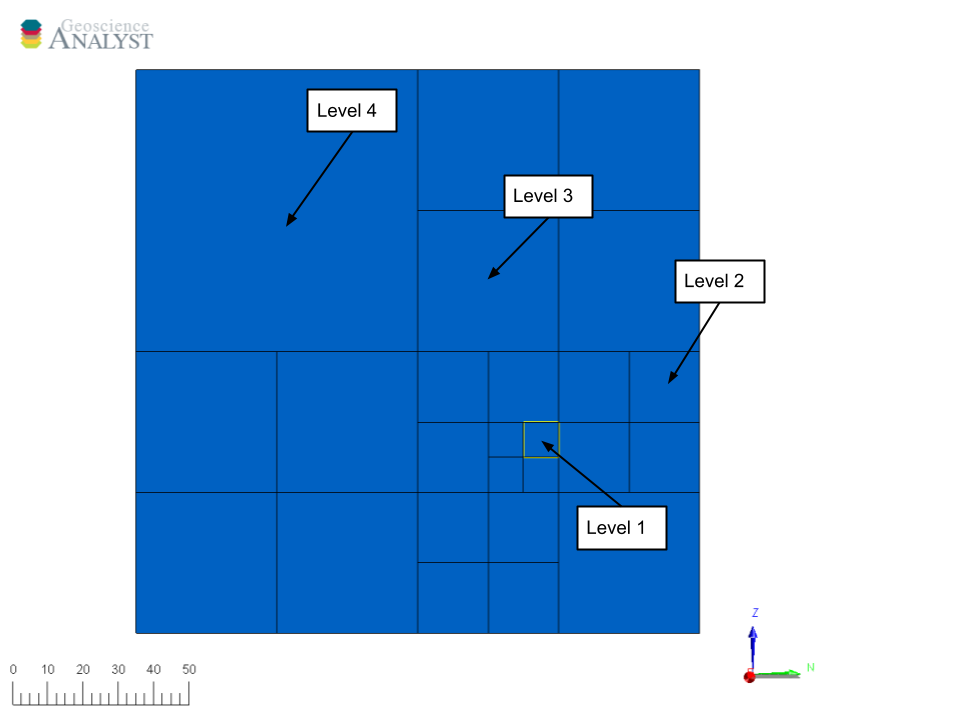

Create a Mesh#

To prepare for inversion, we need to discretize the subsurface into a 3D mesh (grid of cells) under the following constraints:

Use as few cells as possible to remain computationally efficient

Ensure high resolution in target areas to capture small features

We achieve both goals using an adaptive octree mesh, which allows finer resolution where needed and coarser discretization elsewhere.

Fig. 60 Top-down view of the adaptive octree mesh. Smaller (refined) cells are usually concentrated around magnetic measurement points, where model resolution matters most.#

We will use the function discretize.utils.mesh_builder_xyz to create the adaptive octree mesh around our magnetic measurement points and topography.

This function builds a 3D mesh from a cloud of points (xyz) and a base cell size (h). It supports both tensor and tree (octree) meshes and handles padding, depth control, and expansion automatically.

Function:

discretize.utils.mesh_builder_xyz(xyz, h, padding_distance=None, base_mesh=None, depth_core=None, expansion_factor=1.3, mesh_type='tensor', tree_diagonal_balance=None)

Key parameters:

xyz: Input point cloud (e.g., magnetic measurement points)h: Base cell size in x, y, zdepth_core: Depth of the high-resolution corepadding_distance:[[W, E], [N, S], [Down, Up]]padding around the core (default: no padding)mesh_type='tree': Use octree mesh type.expansion_factor=1.3: For tensor mesh type only.

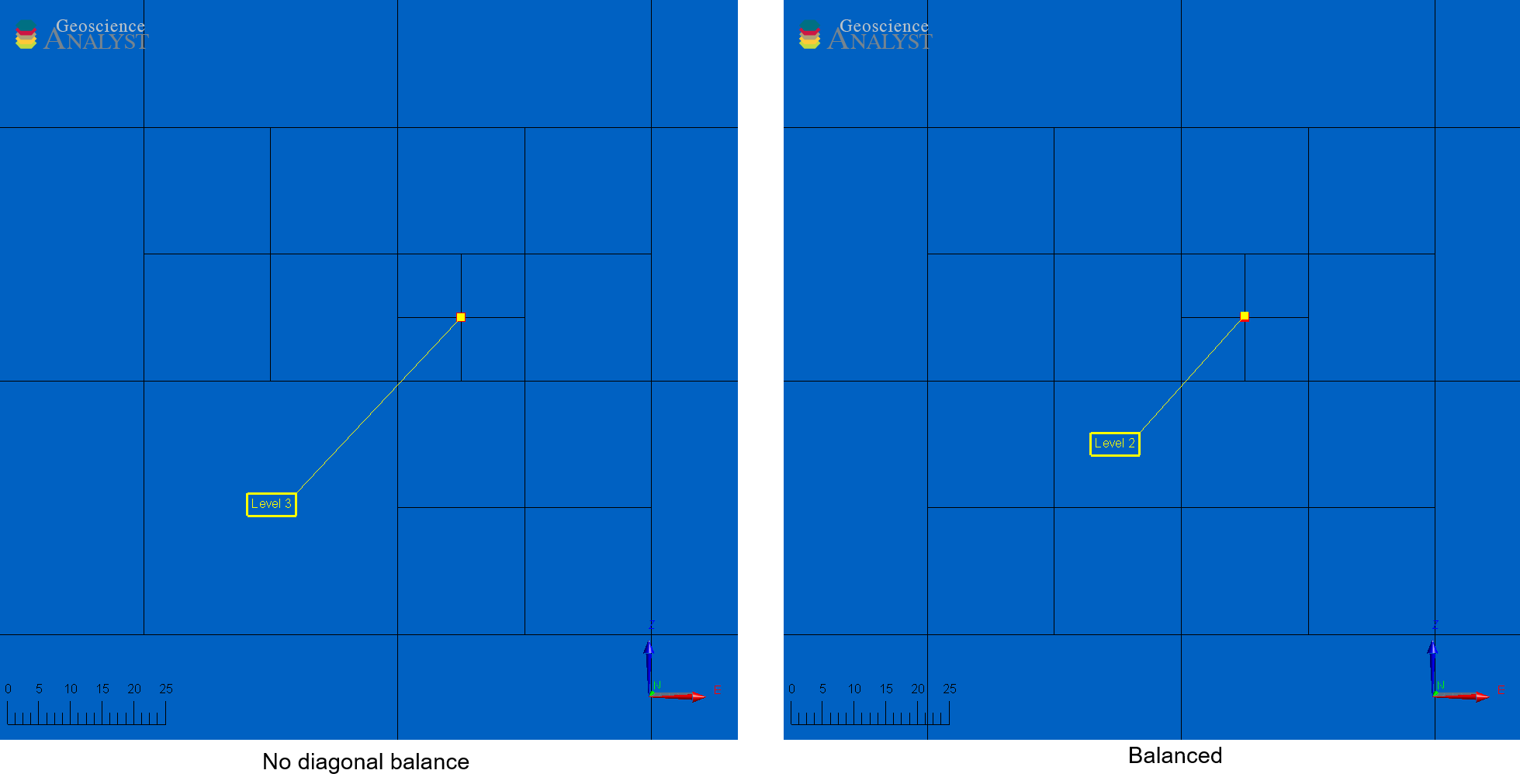

Fig. 61 TreeMesh with and without diagonal balance.#

💡 Note:

Disabling diagonal balance allows abrupt transitions between octree levels (e.g., from 1 to 3), reducing cell count but potentially decreasing simulation accuracy when solving partial-differential equations.

Padding is usually added to capture long-wavelength signals and minimize edge effects. A common guideline is to pad by at least the lateral extent of the data coverage. Here, the target anomaly is well-centered and spectrally isolated, with no significant regional trends. Therefore, padding is omitted to reduce computational cost.

# Define the base cell size in x, y, and z directions (in meters)

# Typically set to half the survey flight height and/or data resolution (the one that is smallest).

dh = 30 # Base cell size in meters (same in x, y, and z)

base_cells = [dh, dh, dh] # [dx, dy, dz] base mesh cell width in meters

# Create base TreeMesh (Octree) covering the full extent of the data

# Start with no refinements

mag_octree = discretize.utils.mesh_builder_xyz(

mag_survey[:, :3], # Use re-sampled magnetic dataset coordinates for domain extent

base_cells, # Base cell size in X, Y, and Z directions

mesh_type="tree", # Use adaptive octree mesh

depth_core=2000, # The mesh will be at least as deep as 2000 m

# padding_distance=[[2000,2000],[1750,1750],[2000,500]] # Padding (not used here, but can be specified for padding around the domain)

)

# Refine the mesh around receiver locations (re-sampled magnetic survey points)

# Function designed for points specifically

mag_octree.refine_points(

mag_survey[:, :3], # Refine around re-samp mag survey points

level=-1, # Start at the (last) highest level, i.e., the base cell size.

padding_cells_by_level=[

6,

6,

6,

], # Number of cells at 30 m, 60 m and 120 m, i.e., 6 cells for each level 1, 2, and 3

finalize=False, # Don't finalize yet. We'll add refinement to topography.

)

# Refine along topography

# Function designed for surfaces specifically

mag_octree.refine_surface(

dem,

level=-3, # Start at level -3, i.e., only refine at 120 m around DEM.

padding_cells_by_level=[1], # Number of cells at 120 m, which is level -3

finalize=True, # Complete the mesh on our last call

)

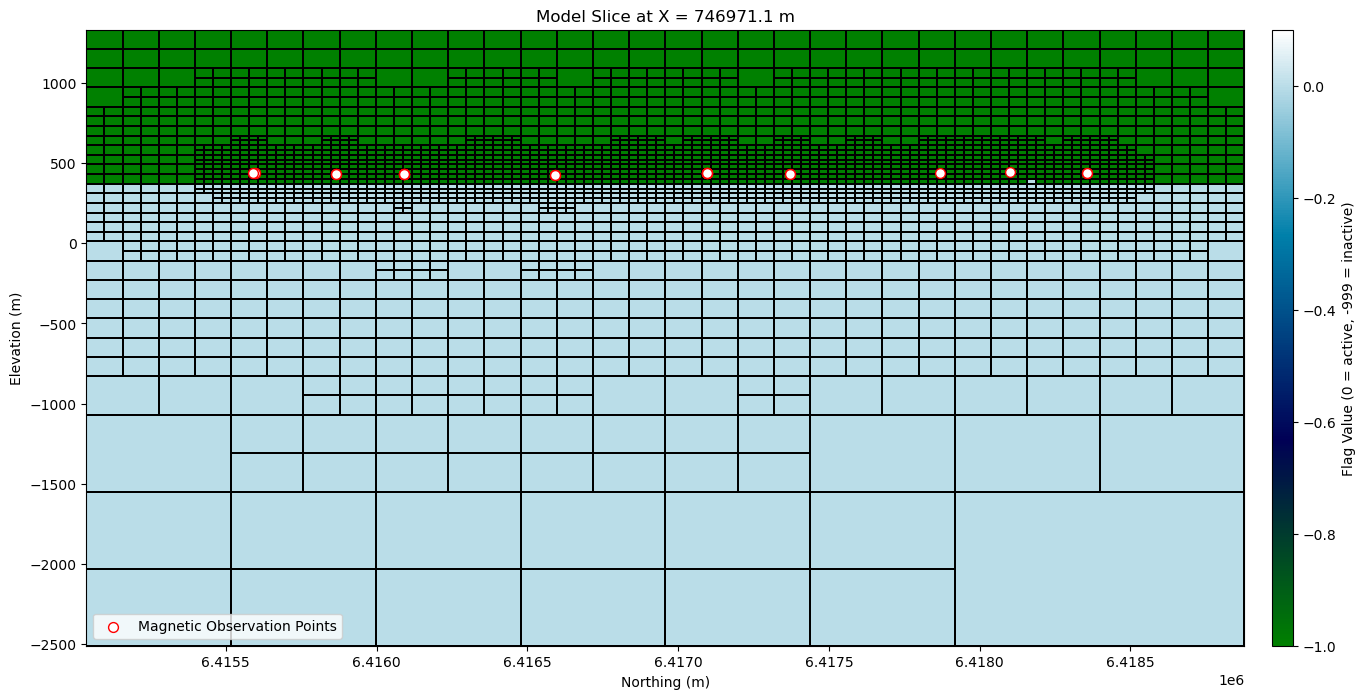

Define Active Cells#

In magnetic modeling, only cells below the Earth’s surface influence the simulated magnetic response. These are called active cells. In contrast, air cells above the surface do not contribute and are excluded from the forward simulation and inversion.

SimPEG uses a boolean mask to identify active versus inactive cells. This is typically done using the discretize.utils.active_from_xyz utility, which compares the mesh to the topography.

Active cells: below topography, used in forward simulation/inversion.

Inactive cells: above topography, ignored to save computation.

The result is a bool array (our object named active) the same size as the number of mesh cells, indicating which are active.

# Identify active (subsurface) cells based on the DEM surface

# This flags all cells whose centers lie below the topography

active = discretize.utils.active_from_xyz(mag_octree, dem)

# Count the number of active (non-air) cells

n_actives = int(active.sum())

print(f"Number of active (non-air) cells: {n_actives}")

Number of active (non-air) cells: 83144

Plot a mesh slice#

print(

f"Cheking X range of stations: min = {mag_survey[:, 0].min()}, max = {mag_survey[:, 0].max()}"

)

Cheking X range of stations: min = 745486.1, max = 748455.3

# Create a model with value 0 for active and -999 for inactive cells (as flag) for plotting the TreeMesh

# This will (only) be used to visualize the active cells in the mesh

# mag_octree.nC returns the total number of cells, we create a mesh with -999 assigned to all cells

full_model = np.full(mag_octree.nC, -999.0)

# Then we set value 0 for active cells only

full_model[active] = 0.0

# Plot TreeMesh X-slice with magnetic stations near the slice

fig, ax = plt.subplots(figsize=(18, 8))

# Get index of slice closest to X=746500 of magnetic data

x_slice_idx = np.argmin(np.abs(mag_octree.cell_centers_x - np.median(mag_survey[:, 0])))

x_val = mag_octree.cell_centers_x[x_slice_idx] # For annotation

# Plot model slice

norm = mpl.colors.Normalize(vmin=-1.0, vmax=0.1)

cmap = mpl.cm.ocean

mag_octree.plot_slice(

full_model,

normal="X",

ind=x_slice_idx,

ax=ax,

grid=True,

pcolor_opts={

"cmap": cmap,

"norm": norm,

"linewidth": 0.1, # show mesh grid

},

)

# Add magnetic stations near the slice

tol = mag_octree.h[0].min() # Minimum cell size in Y direction

mask = (

np.abs(mag_survey[:, 0] - x_val) < tol

) # Mask to select points within tolerance of the slice

ax.scatter(

mag_survey[mask, 1], # Y-axis

mag_survey[mask, 2], # Elevation

s=50,

c="white",

edgecolors="red",

label="Magnetic Observation Points",

)

# Final touches

ax.set(

title=f"Model Slice at X = {x_val:.1f} m",

xlabel="Northing (m)",

ylabel="Elevation (m)",

)

ax.legend(loc="lower left", fontsize=10)

# Add colorbar

cbar = fig.colorbar(

mpl.cm.ScalarMappable(norm=norm, cmap=cmap),

ax=ax,

pad=0.02,

aspect=30,

label="Flag Value (0 = active, -999 = inactive)", # Label for the colorbar

)

plt.show()

Define Mapping from the Model to Active Cells#

In SimPEG, the model refers to a set of parameters, which are not always the same as physical property values directly defined on the mesh. For example, the model might represent:

Logarithms of physical properties (e.g.,

log(conductivity))Parametric shapes (e.g., layers, ellipsoids)

Or, in our case, magnetic susceptibility values in active cells.

To connect these model parameters to the physical property values on the mesh, SimPEG uses mappings, defined through the simpeg.maps module.

In this case, we are inverting for magnetic susceptibility values directly within the active cells of the mesh. Therefore, we use an IdentityMap, i.e. a simple, one-to-one mapping between model parameters and active cell values.

# Define mapping from model to active cells. Our model consists of a magnetic

# susceptibility value (to be defined) for each cell below the Earth's surface.

model_map = simpeg.maps.IdentityMap(nP=n_actives)

Define the Reference and Starting Models#

In SimPEG, we define two important models for inversion:

Starting model (

m_start): Provides an initial guess for the inversion but doesn’t need to resemble the true subsurface. For magnetic inversion using alinearintegral formulation, the choice of starting model has minimal impact on the result. However, it must not be all zeros, as that would prevent gradient computation during the first iteration. A small constant value is often used.Reference model (

m_ref): Encodes prior geologic knowledge or expectations about the subsurface. If no such prior is available and background structures have been removed from the magnetic data, the reference model is typically zero.

💡 Note:

Both models are defined only over active cells (i.e., below surface) and must be 1D NumPy arrays with length equal to the

number of active cells.

Magnetic susceptibility values are expressed in units of \(SI\). In linear least-square problems, the inversion converges to a unique solution regardless of the starting point, unlike nonlinear cases where the starting model can affect convergence.

# Reference model: zero magnetic susceptibility (main background trend has been removed)

m_ref = np.zeros(n_actives)

# Starting model: small uniform magnetic suceptibility across all active cells as an initial guess

m_start = np.ones(active.sum()) * 1e-4

# Print the starting model shape and values for verification

print(

f"Starting model shape (active cells): {m_start.shape}, with {m_start} mag sus (SI) model\n"

)

# Print the reference model shape and values for verification

print(

f"Reference model shape (active cells): {m_ref.shape}, with {m_ref} mag sus (SI) model"

)

Starting model shape (active cells): (83144,), with [0.0001 0.0001 0.0001 ... 0.0001 0.0001 0.0001] mag sus (SI) model

Reference model shape (active cells): (83144,), with [0. 0. 0. ... 0. 0. 0.] mag sus (SI) model

Define the Survey#

We now define the survey configuration required for magnetic inversion. This involves three main components:

Receivers: Define the 3D coordinates \([X, Y, Z]\) of each observation point and the magnetic component measured. In this case, we use the Total Magnetic Intensity after residual and detrending processing.

Source Field: Generally, the passive or active sources responsible for generating geophysical responses, and their associated receivers. In the scalar magnetic case, it defines the Earth’s inducing magnetic field using the

UniformBackgroundFieldclass. This object sets a constant field strength, inclination, and declination across the entire domain derived from the IGRF model.Survey: Combines the receivers and the source field into a

Surveyobject, which is then passed into the simulation to compute forward responses.

💡 Note:

In geophysical literature, “TMI” may refer to either the raw measured field or the residual field (after IGRF removal).

In SimPEG’s scalar magnetic inversion using

components=["tmi"], the data must be residual and reflect only the geologic response within the inversion volume. While this requirement is not explicitly stated in the User Guide, it is the standard practice in the SimPEG User Tutorials - Magnetics. To prepare the data correctly:

Subtract the IGRF (primary field)

Remove broad regional trends not related to the target geology

Ensure remaining signals originate from structures within the inversion volume

# Use the observation locations and components to define the receivers

# To simulate data, the receivers must be defined as a list

receiver_list = potential_fields.magnetics.receivers.Point(

mag_survey[:, :3], components=["tmi"]

)

# Define the uniform inducing magnetic field (IGRF) as the source

source_field = potential_fields.magnetics.sources.UniformBackgroundField(

receiver_list=receiver_list,

amplitude=59294, # Field strength in nT

inclination=-67.1, # Downward-pointing field

declination=-0.89, # Near-true north direction

)

# Define the survey

survey = potential_fields.magnetics.survey.Survey(source_field)

# check the characteristics of the survey

print(f"Number of data points (nD): {survey.nD}")

print("\nSource field object:")

print(survey.source_field)

print("\nReceiver object:")

print(survey.source_field.receiver_list)

print("\nFirst 5 receiver locations (X, Y, Z):")

print(receiver_list.locations[:5, :])

Number of data points (nD): 2500

Source field object:

<simpeg.potential_fields.magnetics.sources.UniformBackgroundField object at 0x000001F42B13F220>

Receiver object:

[<simpeg.potential_fields.magnetics.receivers.Point object at 0x000001F42B13F130>]

First 5 receiver locations (X, Y, Z):

[[7.45503300e+05 6.41559700e+06 4.27444159e+02]

[7.45552600e+05 6.41559850e+06 4.29688324e+02]

[7.45620100e+05 6.41560150e+06 4.30000000e+02]

[7.45685400e+05 6.41560150e+06 4.30628693e+02]

[7.45735100e+05 6.41560150e+06 4.28998169e+02]]

Define the Forward Simulation#

We now define how to simulate the physical (forward) response of our model at the receiver locations.

In SimPEG, this is done by creating a simulation object. In this case, using the 3D integral formulation.

The simulation object must be linked to:

The

survey* (receiver locations and data type)The

mesh(discretization of the model domain)The

active cells(subsurface-only)The

model mapping(from model parameters to physical properties)

These elements are passed as arguments to the simulation class. Additional parameters can be configured to control solver behavior and memory usage.

# Define the forward simulation

simulation = potential_fields.magnetics.simulation.Simulation3DIntegral(

survey=survey,

mesh=mag_octree,

active_cells=active,

chiMap=simpeg.maps.IdentityMap(nP=n_actives),

)

Define Data and Uncertainties#

We can create a SimPEG.Data that defines the observed data and uncertainties.

Uncertainty can be specified in one of two ways:

A combination of

relative_error(percent of each datum) andnoise_floor(fixed small value to avoid numerical instability near zero)A direct

standard_deviationvalue per datum, which defines the total uncertainty (𝜀) assuming Gaussian noise

By setting standard_deviation, we bypass the combination of relative and floor errors and explicitly provide the expected noise level.

💡 Note:

For magnetic data, the uncertainty floor can be chosen based on some knowledge of the instrument error, or as some fraction of the largest anomaly value.

We generally avoid assigning percent uncertainties because the inversion prioritizes fitting the background over fitting anomalies.

In this tutorial, we use \(2\)% of the maximum magnetic anomaly. For magnetic gradiometry data, different floors may be set per component.

In SimPEG, magnetic anomaly values are in units

nT.

# Observed data

dobs = mag_survey[:, -1] # Observed Magnetic Intensity values (nT)

# using righ-handed coordinate system required in SimPEG

# Assigning uncertainty

maximum_anomaly = np.max(np.abs(dobs)) # Maximum absolute value of the magnetic anomaly

floor_uncertainty = 0.02 * maximum_anomaly # Fixed uncertainty (noise floor)

uncertainties = floor_uncertainty * np.ones(

np.shape(dobs)

) # Assign a constant uncertainty to all data points

print(f"Absolute Maximum Anomaly: {maximum_anomaly} nT")

print(f"Assigned Uncertainty: {floor_uncertainty} nT")

# Create a Data object linking the survey, observations, and assumed noise level

data = simpeg.Data(

survey=survey, # The survey object defining the survey geometry; i.e. sources, receivers, data type.

dobs=dobs, # Observed Magnetic Intensity values (nT)

standard_deviation=uncertainties, # Fixed uncertainty (standard deviation of Gaussian noise) per datum in data units (nT)

)

Absolute Maximum Anomaly: 2961.5999999999985 nT

Assigned Uncertainty: 59.23199999999997 nT

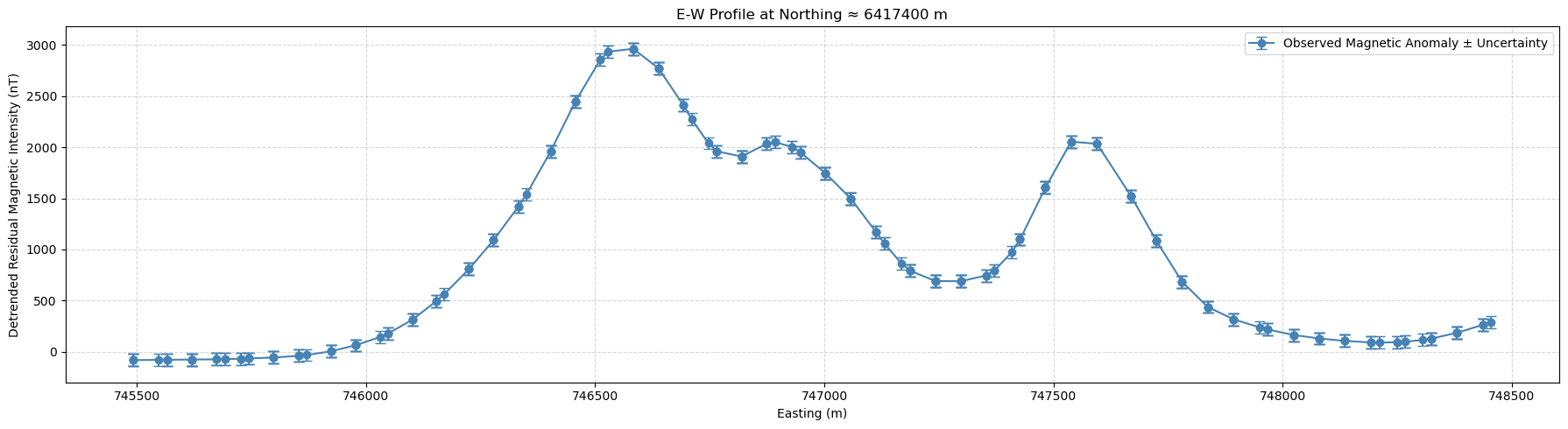

# Plot magnetic profile and assigned uncertainties

# Choose a Northing value to extract the E-W profile and

# a tolerance to select points near that Y value

target_northing, tolerance = 6417400, 100 # Y coordinate and tolerance both in meters

# Create mask to select points close to the target line

line_mask = np.abs(mag_survey[:, 1] - target_northing) < tolerance

# Extract data along the flight line

x_line = mag_survey[line_mask, 0] # Easting

dobs_line = dobs[line_mask] # Magnetic data

err_line = uncertainties[line_mask] # Uncertainty (error bars)

# Sort by Easting (for better plot)

sort_idx = np.argsort(x_line)

x_line = x_line[sort_idx]

dobs_line = dobs_line[sort_idx]

err_line = err_line[sort_idx]

# Plot

plt.figure(figsize=(18, 5))

plt.errorbar(

x_line,

dobs_line,

yerr=err_line,

fmt="o-",

color="steelblue",

capsize=4,

label="Observed Magnetic Anomaly ± Uncertainty",

)

plt.xlabel("Easting (m)"), plt.ylabel("Detrended Residual Magnetic Intensity (nT)")

plt.title(f"E-W Profile at Northing ≈ {target_northing} m")

plt.grid(True, linestyle="--", alpha=0.5)

plt.legend()

plt.tight_layout()

plt.show()

The Objective Function#

Data Misfit Function#

In inversion, we seek a model \( \mathbf{m} \) that best explains the observed data.

This is quantified by the data misfit function.

The objective function is:

Where:

\( \phi_d \) is the data misfit

\( \phi_m \) is the regularization

\( \beta \) balances data fit and model complexity

The data misfit \( \phi_d \), using a least-squares formulation, is defined as:

With:

\( \mathbf{d}_{\text{obs}} \) = observed data

\( \mathbf{d}_{\text{pred}} \) = predicted data from the forward model

\( W_d \) = diagonal weighting matrix, typically \( 1 / \epsilon_i \), where \( \epsilon_i \) is the uncertainty for each datum

# Define the data misfit function

# Quantifies the difference between observed and predicted data

# using the L2 norm, which is suitable for Gaussian noise assumptions.

data_misfit = simpeg.data_misfit.L2DataMisfit(data=data, simulation=simulation)

Regularization Function#

To stabilize the inversion and constrain the solution, we add a regularization term \(\phi_m\) that incorporates prior assumptions about the model.

We use SimPEG’s Sparse regularizer, which allows a weighted least squares formulation. It uses an Iteratively Reweighted Least Squares (IRLS) strategy to promote sparsity in the model and adapt the weighting during inversion.

The regularization combines:

A smallness term to keep the model close to a reference (here, magnetic susceptibility equal to 0.0 \(SI\))

A smoothness term to control spatial variations in the x, y, and z directions

In our setup:

norms = [0, 2, 2, 2]0-norm for smallness encourages compact bodies2-norm for smoothness enforces smooth, continuous changes in all directions

alpha_s,alpha_x,alpha_y,alpha_zcontrol the relative importance of each termUsing the function’s defaults for all terms, but these can be adjusted based on prior knowledge or desired model characteristics

# Define the regularization (Sparse + smoothness)

regularization = simpeg.regularization.Sparse(

mesh=mag_octree, # The octree mesh used for inversion

active_cells=active, # The active cells (subsurface) previously defined

reference_model=m_ref, # Our previously assigned zero susceptibility model m_ref

# Smallness term parameters

# alpha_s=1, # Scaling constant for the smallness regularization term

# Smoothness term parameters

# alpha_x=1, # Scaling constants for the first order smoothness

# alpha_y=1, # along x, y and z, respectively

# alpha_z=1, # If set to None, these scaling constants are set automatically

# according to the value of the length_scale parameters

# length_scale_x=1, # Multiplier constant for smoothness

# length_scale_y=1, # along x,y,z relative to base scale length

# length_scale_z=1, # These are equal to sqrt(alpha_x/base cell size_x) for component X and so on

# Model norms

norms=[0, 2, 2, 2], # L0 norm for smallness, L2 norm for smoothness (x, y, z)

# Must all be within the interval [0, 2].

)

Inversion Directives#

In SimPEG, directives control various steps during the inversion process, such as setting the trade-off parameter \(\beta\), updating weights, and managing the solver.

Directive objects are stored in a list, and SimPEG calls them in sequence at the start and after each model update.

Our setup includes:

UpdateIRLS

Iteratively updates weights for the sparse regularization using Iteratively Reweighted Least Squares (IRLS). This promotescompactorblockystructures. Here, we allow up to 25 IRLS iterations.UpdateSensitivityWeights

Defines how sensitivity-based weights are computed for regularization. These weights help ensure that all cells of the model have a fair chance of being adjusted during inversion, even if their initial sensitivities are low, accounting for the natural decay in sensitivity with depth or distance from the data. Applied once before inversion (every_iteration=False), ensuring all regions are fairly weighted.BetaEstimate_ByEig

Sets the initial trade-off parameter \(\beta_0\) by analyzing the sensitivity (Jacobian) matrix, which describes how model changes affect the predicted data. It selects the largest eigenvalue of this matrix and multiplies it bybeta0_ratio = 10, ensuring that the data misfit and regularization term start on comparable scales in the objective function. This avoids overfitting or underfitting early in the inversion and leads to a more stable solution.UpdatePreconditioner

Updates the matrix preconditioner used by the solver to speed up convergence of the linear system.

Recomputing it (update_every_iteration=True) ensures efficient solving as the model evolves during inversion.

These directives are grouped into a directives_list and passed to the inversion routine.

For this inversion with a non-L2 norm, we don’t use the BetaSchedule and TargetMisfit directives. Here, the beta cooling schedule can be set in the UpdateIRLS directive using the coolingFactor and coolingRate properties. The target misfit for the L2 portion of the IRLS approach can be set with the chifact_start property. If not specified, defaults for the function are used.

💡 Note:

The order matters. SimPEG will raise an error if directives are incorrectly ordered.

A preconditioner transforms the inversion system into an equivalent form with better numerical properties, enabling the solver to converge faster and more reliably.

# Set up inversion directives

# Directive 1 - Set up IRLS to promote sparsity in the model

update_irls = simpeg.directives.UpdateIRLS(

# cooling_factor=2, # Default values but you can decide if you want something different

# cooling_rate=1,

# chifact_start=1.0,

max_irls_iterations=25

)

# Directive 2 - Apply sensitivity-based weighting

sensitivity_weights = simpeg.directives.UpdateSensitivityWeights(every_iteration=False)

# Directive 3 - Estimate initial trade-off parameter β₀ from largest eigenvalue of the Jacobian

starting_beta = simpeg.directives.BetaEstimate_ByEig(beta0_ratio=10)

# Directive 4 - Update preconditioner at every iteration for faster convergence

update_jacobi = simpeg.directives.UpdatePreconditioner(update_every_iteration=True)

# Directive 5 - Track and save output at each iteration

save_out = simpeg.directives.SaveOutputEveryIteration(

name="iterations_magnetics"

) # Saves in the current working directory

# Directives in required order

directives_list = [

update_irls, # IRLS loop for sparsity

sensitivity_weights, # Must come before beta

starting_beta, # Needs sensitivity weights

update_jacobi, # Solver preconditioning

save_out, # Save models and predicted data at every iteration

]

Define the Optimization Algorithm and Run the Inversion#

We now configure and run the inversion by combining the components defined earlier.

ProjectedGNCGoptimizerA projected gradient-based solver suitable for large linear and/or non-linear problems.

This opimization class allows the user to set upper and lower bounds for the recovered model using the

upperandlowerproperties inside the function. We set thelowerbound to zero for positive magnetic susceptibility values, and no upper bound.Here, we will limit the number of iterations to 25.

BaseInvProblem

Combines the data misfit, regularization, and optimization strategy into a single inversion object.BaseInversion

Wraps the inversion problem and applies the directive list to control updates (e.g., \(\beta\), IRLS, preconditioner).Starting model

We start with a uniform model with susceptibility of \(1 \times 10^{-4}\) SI across all active cells.

# Define the optimization algorithm

optimizer = simpeg.optimization.ProjectedGNCG(

lower=0.0, # Apply a lower bound to the model

maxIter=25,

)

# Define an inverse problem, the inversion and run

inv_problem = simpeg.inverse_problem.BaseInvProblem(

data_misfit, regularization, optimizer

)

# The inversion object

inversion = simpeg.inversion.BaseInversion(inv_problem, directives_list)

# Run the inversion

rec_model = inversion.run(m_start) # m_start is the initial model previously defined

Running inversion with SimPEG v0.22.2.dev43+gcd269b4c1.d20241026

[########################################] | 100% Completed | 21.24 s

simpeg.SaveOutputEveryIteration will save your inversion progress as: '###-iterations_magnetics-2025-08-07-12-24.txt'

model has any nan: 0

=============================== Projected GNCG ===============================

# beta phi_d phi_m f |proj(x-g)-x| LS Comment

-----------------------------------------------------------------------------

x0 has any nan: 0

0 1.08e+01 4.60e+05 1.26e+00 4.60e+05 2.94e+05 0

1 5.42e+00 2.30e+05 7.12e+03 2.69e+05 8.54e+04 0

2 2.71e+00 1.47e+05 1.75e+04 1.94e+05 4.83e+04 0 Skip BFGS

3 1.35e+00 9.09e+04 3.22e+04 1.34e+05 3.02e+04 0 Skip BFGS

4 6.77e-01 5.10e+04 5.28e+04 8.68e+04 1.74e+04 0 Skip BFGS

5 3.38e-01 2.64e+04 7.80e+04 5.28e+04 9.96e+03 0 Skip BFGS

6 1.69e-01 1.29e+04 1.05e+05 3.07e+04 5.51e+03 0 Skip BFGS

7 8.46e-02 5.93e+03 1.32e+05 1.71e+04 3.33e+03 0 Skip BFGS

Reached starting chifact with l2-norm regularization: Start IRLS steps...

irls_threshold 0.3318387887373891

8 8.46e-02 2.45e+03 2.96e+05 2.75e+04 1.09e+03 0 Skip BFGS

9 5.50e-02 4.55e+03 3.24e+05 2.24e+04 3.33e+03 0

10 4.14e-02 3.31e+03 4.68e+05 2.27e+04 4.18e+03 0

11 3.24e-02 3.07e+03 5.87e+05 2.21e+04 8.85e+02 0

12 3.24e-02 2.50e+03 7.60e+05 2.71e+04 3.03e+02 0

13 2.22e-02 4.04e+03 7.68e+05 2.11e+04 3.91e+03 0

14 3.46e-02 2.24e+03 8.55e+05 3.18e+04 4.57e+02 0

15 2.11e-02 5.30e+03 7.99e+05 2.22e+04 2.20e+03 0

16 1.61e-02 3.22e+03 9.49e+05 1.85e+04 8.38e+02 0

17 1.20e-02 3.36e+03 1.20e+06 1.78e+04 8.11e+02 0

18 8.58e-03 3.69e+03 1.22e+06 1.42e+04 1.37e+03 0

19 6.86e-03 2.95e+03 1.22e+06 1.13e+04 6.38e+02 0

20 5.60e-03 2.83e+03 1.13e+06 9.14e+03 4.91e+02 0

21 5.60e-03 2.44e+03 1.15e+06 8.90e+03 3.40e+02 0 Skip BFGS

22 4.54e-03 2.87e+03 1.05e+06 7.65e+03 5.23e+02 0

23 4.54e-03 2.41e+03 1.14e+06 7.57e+03 3.28e+02 0

24 4.54e-03 2.73e+03 1.04e+06 7.48e+03 4.25e+02 0

Minimum decrease in regularization. End of IRLS

------------------------- STOP! -------------------------

1 : |fc-fOld| = 0.0000e+00 <= tolF*(1+|f0|) = 4.6032e+04

0 : |xc-x_last| = 1.7199e+00 <= tolX*(1+|x0|) = 1.0288e-01

0 : |proj(x-g)-x| = 4.2544e+02 <= tolG = 1.0000e-01

0 : |proj(x-g)-x| = 4.2544e+02 <= 1e3*eps = 1.0000e-02

1 : maxIter = 25 <= iter = 25

------------------------- DONE! -------------------------

Results#

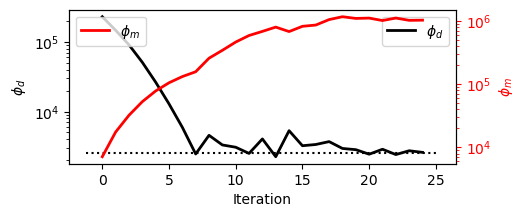

Analysing Convergence#

Plot data misfit and model norms at each iteration to assess convergence and stability. Keep it in mind that \(\phi(m) = \phi_d + \beta \, \phi_m\) and a beta cooling schedule is applied during the inversion.

# Plot the data misfit and and model norm evolution across iterations

# Uses SimPEG plotting utilities (basic plotting)

save_out.plot_misfit_curves(dpi=300)

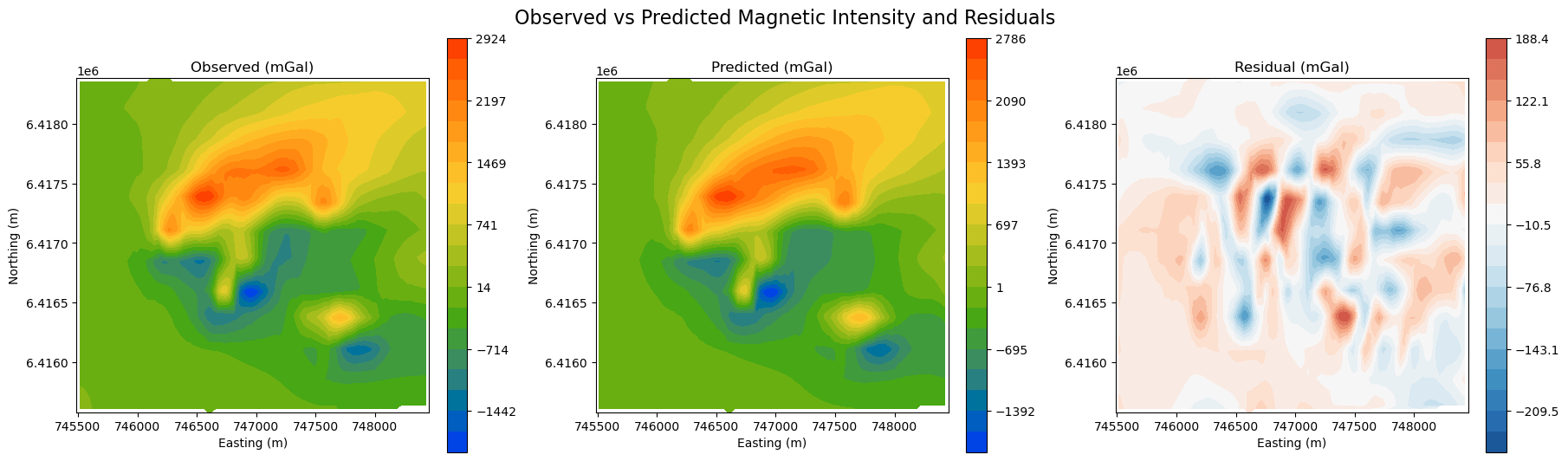

Data fit#

Map view#

Before interpreting the results, it’s essential to validate the quality of the data fit. We want to make sure that most of the signal is captured, leaving only behind random noise (residuals).

⚠️ Without a good fit to the data, any interpretation of the recovered 3D density model may be misleading.

# Validate observed vs predicted vs standardized residual

fig, axes = plt.subplots(1, 3, figsize=(18, 5), constrained_layout=True)

titles = ["Observed (mGal)", "Predicted (mGal)", "Residual (mGal)"]

dpred = inv_problem.dpred[0] # Predicted data

dobs = mag_survey[:, -1] # Observed data

residual = dobs - dpred # Raw residual

data_list = [dobs, dpred, residual]

for ax, title, data in zip(axes, titles, data_list, strict=False):

if data is residual:

vlim = np.nanmax(np.abs(data))

norm = mcolors.TwoSlopeNorm(vmin=-vlim, vcenter=0, vmax=vlim)

contourOpts = {"cmap": "RdBu_r", "norm": norm}

else:

contourOpts = {"cmap": cc.m_CET_R2}

im = plot2Ddata(

mag_survey[:, :2], data, ax=ax, ncontour=20, contourOpts=contourOpts

)

ax.set_title(title)

ax.set_xlabel("Easting (m)")

ax.set_ylabel("Northing (m)")

plt.colorbar(im[0], ax=ax, orientation="vertical")

plt.suptitle(

"Observed vs Predicted Magnetic Intensity and Residuals", fontsize=16, y=1.05

)

plt.show()

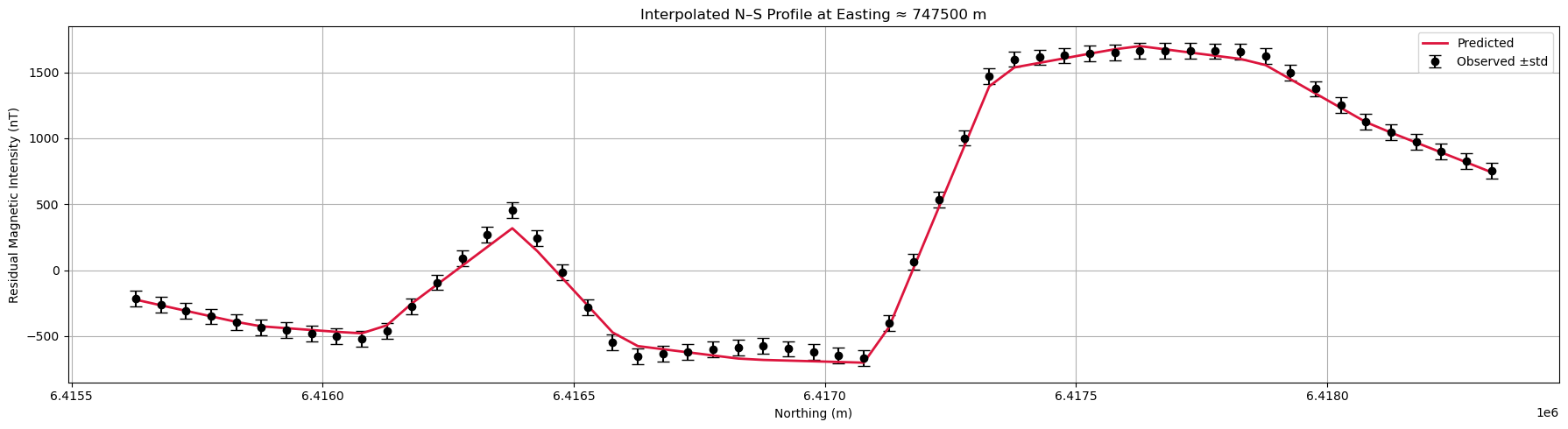

Profile Plot#

It is always useful to plot the results along selected profiles to visualize the observed and predicted data and data uncertainties. It helps with assessing the quality of the fit, potential overfit or underfit.

# Inputs

xy, obs, pred, err = mag_survey[:, :2], mag_survey[:, -1], dpred, uncertainties

profile_east, grid_res = 747500, 50 # Profile location and resolution

# Attention: This is an interpolated profile plot, not a direct data extraction

# Create regular grid

xi = np.arange(xy[:, 0].min(), xy[:, 0].max(), grid_res)

yi = np.arange(xy[:, 1].min(), xy[:, 1].max(), grid_res)

grid_x, grid_y = np.meshgrid(xi, yi)

# Interpolate to grid

obs_grid = griddata(xy, obs, (grid_x, grid_y), method="linear")

pred_grid = griddata(xy, pred, (grid_x, grid_y), method="linear")

err_grid = griddata(xy, err, (grid_x, grid_y), method="linear")

# Extract profile at closest Easting

col_idx = np.argmin(np.abs(xi - profile_east))

y_profile = yi

obs_profile = obs_grid[:, col_idx]

pred_profile = pred_grid[:, col_idx]

err_profile = err_grid[:, col_idx]

# Remove NaNs (from interpolation gaps)

mask = ~np.isnan(obs_profile) & ~np.isnan(pred_profile) & ~np.isnan(err_profile)

y_profile, obs_profile, pred_profile, err_profile = (

y_profile[mask],

obs_profile[mask],

pred_profile[mask],

err_profile[mask],

)

# Plot

plt.figure(figsize=(18, 5))

plt.errorbar(

y_profile,

obs_profile,

yerr=err_profile,

fmt="o",

color="k",

capsize=5,

label="Observed ±std",

)

plt.plot(y_profile, pred_profile, "-", color="crimson", lw=2, label="Predicted")

plt.xlabel("Northing (m)")

plt.ylabel("Residual Magnetic Intensity (nT)")

plt.title(f"Interpolated N-S Profile at Easting ≈ {profile_east} m")

plt.grid(True)

plt.legend()

plt.tight_layout()

plt.show()

While there might still be some correlated signal in the residuals, overall the data fit is good. We can move on to interpretating the model.

Models#

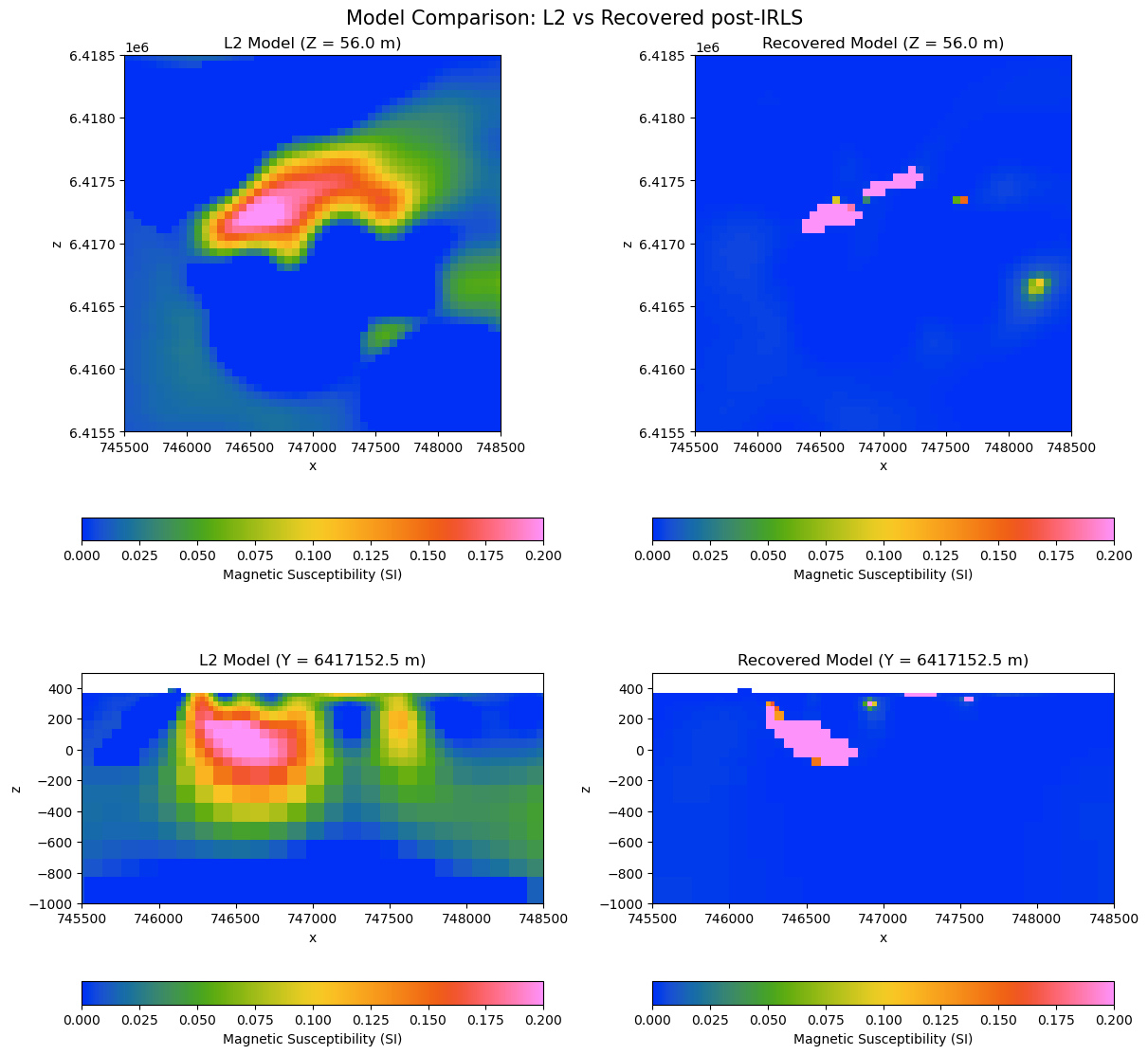

The inversion took 7 iterations to reach the target misfit, followed by 16 iterations to increase sparsity. The figure below shows horizontal and vertical sections through both solutions.

See the Regularization Section for more details on sparsity constraints.

# Map models to full mesh with inactive cells set to np.nan

plotting_map = simpeg.maps.InjectActiveCells(mag_octree, active, np.nan)

model_l2 = plotting_map * inv_problem.l2model

model_rec = plotting_map * rec_model

# Slice indices to plot

z_ind = 85

y_ind = 70

z_val = mag_octree.cell_centers_z[z_ind]

y_val = mag_octree.cell_centers_y[y_ind]

# Plot limits

extent_xy = [745500, 748500]

extent_z = [-1000, 500]

# Set up 2x2 plot layout

fig, axes = plt.subplots(

2,

2,

figsize=(12, 11),

constrained_layout=True,

gridspec_kw={"height_ratios": [1.2, 1]},

)

# Define slices to plot

slices = [

(model_l2, "Z", z_ind, axes[0, 0], f"L2 Model (Z = {z_val:.1f} m)"),

(model_l2, "Y", y_ind, axes[1, 0], f"L2 Model (Y = {y_val:.1f} m)"),

(model_rec, "Z", z_ind, axes[0, 1], f"Recovered Model (Z = {z_val:.1f} m)"),

(model_rec, "Y", y_ind, axes[1, 1], f"Recovered Model (Y = {y_val:.1f} m)"),

]

# Plot all slices

for model, normal, ind, ax, title in slices:

im = mag_octree.plot_slice(

model,

normal=normal,

ind=ind,

ax=ax,

pcolor_opts={"cmap": cc.m_CET_R1, "vmax": 0.2},

)

ax.set_title(title, fontsize=12)

ax.set_xlim(extent_xy)

ax.set_ylim([6415500, 6418500] if normal == "Z" else extent_z)

ax.set_aspect("equal")

plt.colorbar(

im[0],

ax=ax,

orientation="horizontal",

pad=0.1,

label="Magnetic Susceptibility (SI)",

)

fig.suptitle("Model Comparison: L2 vs Recovered post-IRLS", fontsize=15)

plt.show()

Results#

The smooth model (up to iteration 8) reveals a broad, continuous zone of elevated magnetic susceptibility at depth. The geometry and position of this anomaly closely match those identified in the gravity inversion, including the presence of smaller “fuzzy” anomalies at the margins.

In contrast, the compact model (from 9 to 22) produces a sharply defined, high-susceptibility body embedded within a relatively uniform background. Here, susceptibility contrasts are more pronounced, reaching values up to ~2.0 SI, and the target structure stands out clearly from surrounding geology.